Lumen Renderer

Project Details

- Project: Custom path tracing tech demo project

- Language: C++ / CUDA / OptiX 7

- Team: 6 graphics programmers

- Roles: Lead, graphics & generalist programmer

- Dates: 07/10/2020 - 02/07/2021

Contribution

I fulfilled multiple roles on the team for the Lumen Renderer. The most prominent were project lead, product owner and generalist programmer.

I played a large part in planning the project's goals and milestones and managed the team throughout the whole process. I also did a lot of programming, initially as an engine programmer improving the CPU side of the codebase, which is based on an early version of my Manta Engine, and later as a graphics and pipeline programmer.

I was involved in implementing features such as asset loading and indirect illumination, as well as numerous smaller features relating to pipeline design and implementation.

Naturally, not everything I worked on made it into the final build. Some notable features that we planned and that I did a lot of work on, but that had to be cut, were DLSS upscaling and denoising. We were planning to implement NRD denoising, but in the end only a very rough implementation of the OptiX denoiser made it into the final product, and unfortunately no upscaling of any kind.

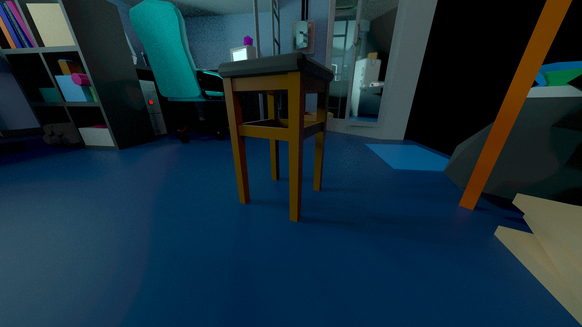

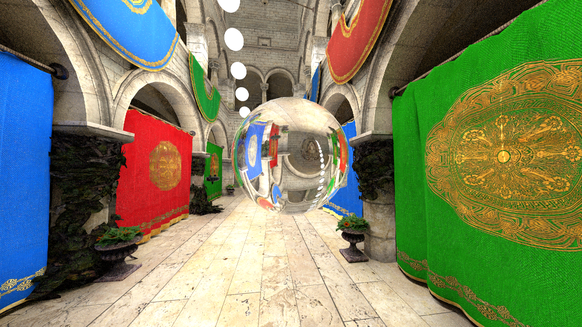

Because denoising and upscaling were never fully implemented, all screenshots on this page are "raw" renders without any kind of filtering or other enhancements.

Experience

The Lumen Renderer marked a number of firsts for me. It was the longest continuous project I have worked on, my first time working on a GPU-accelerated path tracer, and my first time writing CUDA code and dealing with OptiX. In terms of both technology and planning, it has been the most challenging project I have worked on so far.

This project taught me what it really means to write code for the GPU and design a graphics pipeline, and helped me understand numerous graphics concepts and terminology. Lastly, managing our team and project was a valuable learning experience, especially considering everyone was still working remotely until the end of the project due to Covid.

Lumen is a full path tracer. No hybrid or raster tricks are used, and everything is GPU-accelerated using DX11 and OptiX 7.1. The primary goal was to create a volumetric path-tracing tech demo optimized for RTX hardware. Over the course of the project we deviated slightly, putting less emphasis on the voxel-based volumes and more on the engine part of the project.

What we ended up with is best described as a path-tracing engine that lets you render any glTF scene in real time on RTX hardware and allows for homogeneous volumes within that scene.
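To give an idea of what supporting homogeneous volumes in a path tracer involves, here is a minimal sketch of the two standard building blocks: Beer-Lambert transmittance and free-flight distance sampling. This is not Lumen's actual code. The function names (`transmittance`, `sampleFreeFlight`, `freeFlightPdf`) and the extinction coefficient `sigma_t` are illustrative assumptions, and it is written as plain host-side C++ rather than as a CUDA/OptiX kernel.

```cpp
#include <cassert>
#include <cmath>

// Illustrative sketch only; names and parameters are hypothetical.

// Beer-Lambert transmittance through a homogeneous medium.
// sigma_t: extinction coefficient (per world unit), distance: path length.
inline double transmittance(double sigma_t, double distance) {
    assert(sigma_t >= 0.0 && distance >= 0.0);
    return std::exp(-sigma_t * distance);
}

// Sample the distance to the next scattering event by inverting the
// exponential CDF. u is a uniform random number in [0, 1).
inline double sampleFreeFlight(double sigma_t, double u) {
    assert(sigma_t > 0.0 && u >= 0.0 && u < 1.0);
    return -std::log(1.0 - u) / sigma_t;
}

// Probability density of a distance produced by sampleFreeFlight.
inline double freeFlightPdf(double sigma_t, double distance) {
    return sigma_t * transmittance(sigma_t, distance);
}
```

In a path tracer, a sampled distance that is shorter than the distance to the nearest surface would mean the ray scatters inside the volume; otherwise the ray reaches the surface. Because the medium is homogeneous, a single coefficient is enough and no ray marching through a voxel grid is required.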